What does IT infrastructure mean? IT infrastructure is the collection of hardware, software and services required to run an organization. In this article we discuss IT infrastructure, its components and its evolution throughout history. Understanding the evolution of and trends in information systems is of great importance in the rapidly changing IT industry.

Highlights:

- What Is IT Infrastructure?

- Evolution of IT Infrastructure

- Present Scenario of IT Infrastructure

- AI And IT

- Trends in IT Infrastructure

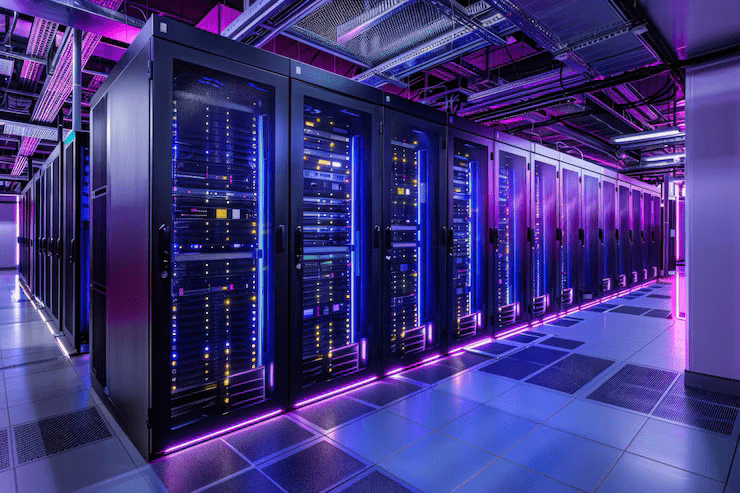

Definition of IT Infrastructure

Information technology infrastructure (IT infrastructure) is a collection of hardware and software required to run an entire organization.

It can also be defined as the entire set of services the firm provides, including IT management, computer services and data management. IT infrastructure is the basis on which an organization interacts with its stakeholders.

The evolution of information technology is changing the functions of business. The pace of technological change is accelerating. The way companies design, develop and run technology infrastructure must change. Modern companies cannot function without an IT infrastructure that is flexible and responsive.

A well-configured, well-networked IT infrastructure can improve efficiency across many processes. A poorly configured or poorly networked one, however, becomes an obstacle to growth and progress.

The IT infrastructure of today's companies is the product of more than 50 years of development and evolution of data storage, processing and transmission systems. It is important to understand how IT infrastructure has evolved in order to determine what it should look like in the future.

Below we highlight the periods that, in our view, shaped the evolution of the software, hardware, networks and data that together form an IT infrastructure.

Evolution of IT Infrastructure

The computing platforms that make up IT infrastructure have evolved over the past 50 years through five stages, each representing a different configuration of computing power and infrastructure elements. The major stages are described below.

The General-Purpose Mainframe And Minicomputer Era

The mainframe era was one of highly centralized computing. Computers were controlled and programmed by professional system operators, typically with support from a single vendor.

IBM introduced the transistorized IBM 1401 and IBM 7090 machines in 1959, ushering in the era of widespread commercial mainframe computing. IBM's dominance of mainframe computing continued from 1965 with the IBM 360 series, which provided multitasking, time-sharing and virtual memory. It was also the first mainframe with a powerful operating system.

Minicomputers eventually began to alter the mainframe pattern. In 1965, DEC (Digital Equipment Corporation) began producing minicomputers: powerful machines sold at far lower prices than IBM mainframes. Minicomputers made decentralized computing possible, and they could be customized to the needs of individual departments or business units.

Personal Computer Era

The MITS Altair 8800 and the Xerox Alto, both from the 1970s, are recognized as the first personal computers, but they reached only a limited number of users. The IBM PC, introduced in 1981, was the first to be widely adopted by American businesses. Early PCs ran the DOS operating system, which used a text-based command language; Microsoft Windows came later. A computer running Windows on an Intel processor became the standard personal computer. Roughly 90% of personal computers run Windows and about 10% run Macintosh.

Client/Server Era

Client/server computing is a model in which desktop and laptop computers act as clients, while computers that provide services or features act as servers. The term "server" also refers to the network software or application that provides those services. Servers are typically more powerful than personal computers and are often built from inexpensive chips, with multiple processors in a single casing or mounted in server racks.

Enterprise Computing Era

In the late 1990s, the internet became a more trusted environment for communication. As a result, organizations began to rely heavily on the Transmission Control Protocol/Internet Protocol (TCP/IP) networking standards to tie their dissimilar networks together. IT infrastructure now linked small networks and diverse computer hardware, from mainframes to personal computers, into an enterprise-wide network, allowing information to flow freely within the organization and with other organizations.

The Cloud And Mobile Computing Age

Cloud computing grew out of the rapid development of the internet. It is a computing model that provides access to shared computers, applications and services over a network, usually the internet. Cloud data centers house thousands of computers, which users can reach from any connected device: desktops, laptops, tablets and smartphones.

Growth period

IT infrastructure is a key technology driver for many sectors of the economy. Its influence keeps growing, particularly as companies realize that information is their most valuable resource and that they must protect it.

The Full Picture of IT Evolution

Mainframe Computing Goes Mainstream

The IBM 360, launched in 1964, is widely regarded as the first modern mainframe, and it arrived just as mainframes were becoming mainstream. It was an era of highly centralized computing, with all IT infrastructure elements provided by a single vendor. The IBM 360 was capable of 229,900 calculations per second and could support thousands of remote terminals via proprietary data lines and communication protocols. The mainframe became the foundation of large-scale automation and efficiency for many of the world's largest companies.

These systems served multiple users through terminals, but access was restricted to specialists. By the 1980s, however, mid-range systems built on new technologies such as Sun's RISC chips had begun to emerge and challenge the dominance of mainframes.

It may sound dated, but mainframes have become a platform for innovation in digital business. Globally, the mainframe market continues to grow at a CAGR of 4.3% and is expected to reach $3 billion in 2025, driven by the banking, financial services and insurance (BFSI) and retail sectors.

Mainframes have endured in a fast-changing industry because they have evolved and adapted to modern computing models such as cloud computing. In fact, 23 of the 25 largest US retailers and 92 of the world's 100 biggest banks still rely heavily on mainframes. In a 2017 IBM survey, almost half of the executives said that hybrid clouds incorporating mainframes improved operating margins, accelerated innovation and reduced the total cost of IT ownership. IBM's latest z15 mainframe range continues to push the boundaries of mainframe capabilities, with support for open-source software, cloud-native development, policy-based controls and pervasive encryption.

PCs for democratizing computing

The minicomputer was introduced in the 1960s. Minicomputers not only provided more power but also allowed for decentralization and customization, in contrast to the monolithic mainframe model. It was not until 1975 that the Altair 8800, the first truly affordable personal computer, was released. The Altair 8800 was not yet a computer for the masses, as it required extensive assembly, but the availability of practical software made it popular among computer enthusiasts and entrepreneurs. The Apple I and Apple II were also launched in the 1970s, but they were still largely restricted to technologists.

The IBM PC, launched in 1981, is considered the start of the PC age. It used an operating system developed by Microsoft, then a little-known company. The IBM PC revolutionized computing and established many standards for desktop computing. Its open architecture paved the way for innovation and lower prices, and it enabled new productivity software for word processing and spreadsheets.

Early on, many enterprise PCs were used as standalone desktop systems with no networking capabilities, because the hardware and software required for networking were lacking. Networked PCs did not become widespread until the late 1980s and 1990s, as local area networks matured and the internet opened up to commercial use.

Networking PCs through Client-Server and Enterprise Computing Eras

In the 1980s, businesses began to realize that they needed to connect their PCs so that employees and managers could collaborate and share resources. The client-server model brought truly distributed computing to PCs. In this model, each PC is connected over a local area network (LAN) to a powerful server computer that provides access to many services and capabilities. The server can be a mainframe, or a configuration of smaller, inexpensive systems that offers mainframe-class functionality and performance at a fraction of the cost. This development was sparked by software that let multiple users access the same data and functionality at the same time. Client-server computing enabled collaboration and data sharing almost exclusively within an enterprise; sharing data with other companies was possible, but it required extra effort.
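To make the client-server division of labor concrete, here is a minimal sketch using Python's standard `socket` and `threading` modules. It runs a toy TCP server and client on the same machine. The upper-casing "service" and the function names `serve_once`, `send_request` and `demo` are illustrative assumptions and are not part of any real enterprise system. A production server would accept many clients concurrently and frame its messages properly.

```python
import socket
import threading


def handle_client(conn: socket.socket) -> None:
    """Server side: read one short request and send back a reply."""
    with conn:
        request = conn.recv(1024)
        conn.sendall(b"SERVER: " + request.upper())


def serve_once(listener: socket.socket) -> None:
    """Accept a single client connection and handle it."""
    conn, _addr = listener.accept()
    handle_client(conn)


def send_request(host: str, port: int, message: str) -> str:
    """Client side: connect over TCP, send a message, return the server's reply."""
    with socket.create_connection((host, port)) as client:
        client.sendall(message.encode())
        return client.recv(1024).decode()


def demo(message: str) -> str:
    """Start a local server on a free port, make one client request, then shut down."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0 asks the OS for any free port
        listener.listen()
        port = listener.getsockname()[1]
        server_thread = threading.Thread(target=serve_once, args=(listener,))
        server_thread.start()
        reply = send_request("127.0.0.1", port, message)
        server_thread.join()
    return reply


if __name__ == "__main__":
    print(demo("get sales report"))  # prints: SERVER: GET SALES REPORT
```

Binding to port 0 keeps the example self-contained. In a real deployment, clients would connect over the LAN to a server at a known host and port.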

The client-server model is still one of the core ideas in network computing. Its centralized architecture offers greater control over provisioning, security and access to multiple services. Its popularity also led to the creation of enterprise resource planning (ERP) systems, which combine a central database with modules for business functions such as finance and HR.

In the 1990s, the client-server model evolved into enterprise computing, driven by the need to integrate disparate networks and applications into a single enterprise-wide infrastructure. As the internet grew in popularity, companies adopted internet standards such as TCP/IP and web services to unify their networks and to let information flow freely within and across business environments.

Cloud Computing: Disrupting Enterprise IT

In 1999, Salesforce launched an enterprise-level CRM application as an alternative to traditional desktop software. The term cloud computing had not yet entered the technological lexicon, although its origins can be traced back to late 1996, when Compaq executives envisioned a future in which all business software would move to the web as "cloud computing enabled applications."

Three years later, Amazon joined the race, offering computing and storage over the internet. Together, these two events presaged a disruptive, transformative trend in IT in which the focus shifted from hardware to software. Google, Microsoft and IBM have since joined the fray, and cloud computing is now the standard for enterprise IT.

According to Gartner, enterprise IT spending on cloud-based offerings will grow faster than spending on non-cloud offerings through at least 2022. The trend is so strong that Gartner coined the term "cloud shift" to describe IT spending that puts cloud first.

Cloud computing gives enterprise IT on-demand access to computing resources such as networks, servers, storage, applications and services, without the need to manage and maintain the underlying hardware. Cloud services also carry a cost advantage: businesses pay only for the resources they use.
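Simple arithmetic can illustrate the pay-per-use argument. The sketch below compares paying for peak capacity all month with paying only for the server-hours actually used. The demand profile and both prices are hypothetical numbers chosen for illustration, not real vendor rates.

```python
def on_prem_monthly_cost(peak_servers: int, cost_per_server_month: float) -> float:
    """On-premises: capacity is sized for peak demand and paid for all month."""
    return peak_servers * cost_per_server_month


def pay_per_use_monthly_cost(hourly_servers: list[int], price_per_server_hour: float) -> float:
    """Cloud pay-per-use: charge only for the server-hours actually consumed."""
    return sum(hourly_servers) * price_per_server_hour


# Hypothetical demand profile: 10 servers for 8 business hours, 2 servers otherwise.
one_day = [10] * 8 + [2] * 16
one_month = one_day * 30  # 720 hourly readings

peak = max(one_month)
print(on_prem_monthly_cost(peak, cost_per_server_month=150.0))  # 1500.0
print(pay_per_use_monthly_cost(one_month, price_per_server_hour=0.25))  # 840.0
```

In this made-up scenario, the cloud bill is lower because idle off-peak capacity is not paid for. If demand stayed close to peak around the clock, the comparison could easily reverse.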

Cloud services allow IT infrastructure to scale both vertically (adding capacity to an existing machine) and horizontally (adding more machines). This removes much of the difficulty of sizing on-premises systems to avoid both overload and underutilization.
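Horizontal scaling decisions are often automated with a proportional rule. The sketch below uses the formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). It is a simplified illustration: it omits the autoscaler's tolerance band and stabilization windows, and the bounds and utilization figures are hypothetical. Vertical scaling (resizing a single machine) is not shown.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 20) -> int:
    """Horizontal scaling: choose an instance count that moves average utilization
    back toward the target, clamped to configured (hypothetical) bounds."""
    desired = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90, 60))    # 6: overloaded, scale out
print(desired_replicas(4, 15, 60))    # 1: underused, scale in
print(desired_replicas(10, 100, 40))  # 20: the formula gives 25, capped at max_replicas
```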

Cloud computing is more than a cheaper alternative to on-premises systems; it can also be a key driver of innovation. Artificial intelligence and machine learning (AI/ML), for instance, are currently the technologies of choice for creating growth and competitive advantage in enterprises.

An enterprise that wants to take advantage of these technologies can either invest significant resources of its own or subscribe to machine-learning-as-a-service (MLaaS) offerings from its cloud provider.

Most of the top cloud service providers now offer a variety of machine learning tools as part of their portfolios, including data visualization APIs, facial recognition, natural language processing, predictive analytics and deep learning.

AWS, for example, lets customers add advanced AI capabilities to their applications, such as image and video analysis, natural language processing, personalized recommendations and virtual assistants. Quantum computing is another theme, which we explore in the following section.

IT Makes a Quantum Leap

Quantum computing is the next step in the evolution of information technology. It uses the properties of quantum physics to store and process data. Although the technology is new, there is already a serious race for supremacy. In 2019, Google's research team claimed to have demonstrated quantum supremacy by completing a task beyond the capabilities of the most powerful classical supercomputer.

Google estimated that the calculation would have taken that supercomputer 10,000 years to complete. IBM, Google's main competitor, met this claim with heavy skepticism and questioned whether the concept of quantum supremacy had been correctly interpreted.

IBM, for its part, aims to demonstrate quantum computing capabilities across a wide range of problems and use cases. It believes that demonstrating what quantum computers can do in conjunction with classical computers is more relevant and important than celebrating moments of narrow superiority.

This intense competition does, however, help move quantum computing from an academic possibility to a commercial reality. Spending on quantum computing is estimated to grow from $260 million in 2020 to $9.5 billion by the end of the decade.

All the major tech companies, including Microsoft and Intel, are racing to lead in quantum computing, and Quantum Circuits and Rigetti Computing are also competing. VCs and private investors are joining the rush: since 2012, VCs have funded at least 52 quantum technology companies.

Enterprise interest is also increasing. According to an IDC report, many companies in manufacturing, financial services and security are already "exploring more potential use-cases, developing advanced prototypes and being further along with their implementation status."

Due to this interest, quantum computing services are expanding quickly from specialized laboratories to the cloud. Many cloud service providers including IBM, Microsoft and Amazon now offer quantum computing as a cloud service.

Are we heading for a post-silicon era of computing? Not anytime soon. Quantum computing's unique processing abilities will certainly open up new opportunities, but there will still be many scenarios in which classical computing is more productive and efficient than its more sophisticated counterpart.

A hybrid classical-quantum approach is likely to be the future of computing. Quantum computing has a long way to go before it becomes mainstream. It still faces many limitations, including those related to scope, stability, and scale.

Artificial Intelligence and Information Technology

Artificial intelligence and information technology are a match made in heaven.

In the wake of Industry 4.0, artificial intelligence and information technology have become buzzwords that are often used interchangeably. AI has proven its importance by revitalizing information technology, and IT systems are being transformed from passive, "dumb" systems into smart ones at remarkable speed.

The information technology industry revolves around software, computers and data transmission. Behind the scenes, artificial intelligence is increasingly shaping future technologies and AI-assisted applications.

Artificial Intelligence vs. Information Technology

Artificial intelligence is not the same as information technology.

AI and machine learning aim to perform human-like tasks such as learning, adapting, processing data and recognizing speech. Information technology systems, by contrast, are built to capture, store, evaluate and analyze data.

Traditional information technology is largely limited to transmitting and manipulating data. AI-driven applications, on the other hand, can work alongside IT systems to speed up problem resolution and improve IT operations.

How Artificial Intelligence meets Information Technology

Whether you agree or not, most technological innovations are products of information technology. The fusion of artificial intelligence with information technology is what is taming data complexity.

AI is undoubtedly the key to transforming the IT industry's systems into intelligent solutions that scale IT functionality. What makes them so intelligent? Robotic process automation (RPA) software and optimization are two of AI's main functions in IT. Combined with artificial intelligence, RPA automates IT operations and tasks, including data analysis and decision-making.

Still not convinced?

Look at the main applications of artificial intelligence in information technology:

1) Data security:

Big data is a growing problem for data protection and cybersecurity. The Internet of Things and cloud computing are largely responsible for the massive volume of data. How should organizations handle data privacy and security across industries?

AI systems can build a multi-layer cybersecurity defense that detects data breaches and vulnerabilities, helping to protect the security and privacy of sensitive data. They can also suggest solutions and precautions for security-related problems.

2) Building Better Information Systems

The foundation of any information system is an efficient, bug-free program. AI frameworks use algorithms to help programmers write better code, and AI can shorten development times by suggesting pre-designed algorithms based on their past performance.

3) Process Automation:

Deep learning networks are integrated into AI systems to automate back-end processes, which reduces cost and saves time. AI algorithms can learn from their mistakes and optimize code to perform better. AI-driven automation can also reduce problems with UI testing, speeding up delivery cycles.

Advantages of Artificial Intelligence and Information Technology

Before software is deployed, the main priorities are quality and development speed. AI systems can make predictions while software prototypes are being built, helping teams overcome flaws in the development and deployment process.

The following are some of the main benefits of AI integration in Information Technology:

- Architects, developers and IT managers can make faster decisions by leveraging the strategic insights that AI derives from data through predictive analysis.

- AI-driven predictive analysis can reduce deployment times dramatically, because developers do not need to wait until the last stage of deployment.

- AI is a revolution in the modern IT world: with natural language processing, computers can process large amounts of text data and then perform specific tasks.

- AI-powered software testing speeds up regression testing. When the development team releases a new version of code, the QA department can identify errors quickly, reducing the time each test run takes.

Trends in IT Infrastructure

I&O leaders are faced with a mixture of traditional and transformative challenges.

Infrastructure and operations (I&O) leaders should focus on 10 key technology trends that will support their initiatives.

During the Gartner Data Center Infrastructure & Operations Management Conference held in Las Vegas, David Cappuccio, a vice president and distinguished Gartner analyst, said that technology trends that affect I&O can be divided into three categories: strategic, tactical, and organizational.

Cappuccio says that these trends will directly affect how IT services are delivered to businesses over the next five years. IT leaders must understand the emerging trends and their cascading effects on IT operations, which can significantly influence IT strategy, planning and operations.

Strategic

Trend 1: The disappearance of data centers

Gartner estimates that by 2020, cloud infrastructure providers (IaaS and PaaS) will sell more computing power than is sold and deployed into enterprise data centers. Most enterprises, unless they are very small, will continue to run on-premises data centers. However, as the majority of compute power moves to IaaS, vendors and enterprises need to focus on managing and leveraging hybrid architectures that combine on-premises and off-premises, cloud and non-cloud computing.

Trend 2: Interconnect fabrics

Data center interconnect fabrics will help deliver on the promise of a software-defined, dynamic data center. The ability to monitor and manage workloads dynamically, or to quickly provision LAN and WAN services through an API, opens up a wide range of possibilities.

Trend 3: Microservices, Containers and Application Streams

Containers are a good way to implement per-process isolation, which makes them ideal for microservices. A microservices architecture builds an application as a set of small services, each running as its own process and communicating through lightweight, network-based mechanisms. Microservices are easy to deploy and manage, and once they are packaged inside containers, they have very little interaction with the OS.

Tactical

Trend 4: Business Driven IT

Recent Gartner surveys show that business units account for up to 29 percent of IT spending, and this share will continue to grow. Business-driven IT is often used to bypass slow traditional IT processes. Today, its aim is increasingly to give technically savvy people the ability to implement new ideas quickly, adapt to new markets and enter them as easily as possible.

IT leaders are aware that business-driven IT is a valuable asset to an enterprise. Their role, therefore, should be to maintain relationships with the key stakeholders of the business, so that central IT can be kept informed about new projects and their long-term impact on operations.

Trend 5: Data Centers as a Service

IT leaders must create a data-center-as-a-service (DCaaS) model, in which IT and the data center are responsible for delivering the right service, at the right pace, from the right provider, at the right price. IT becomes a service broker.
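One way to picture IT acting as a service broker is as a selection rule over a catalog of offers. This minimal sketch picks the cheapest offer that satisfies a region and latency requirement. The `Offer` fields, provider names and prices are hypothetical, and a real broker would weigh many more factors, such as compliance, support and data transfer costs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Offer:
    provider: str          # hypothetical provider name
    region: str
    latency_ms: float      # expected latency to users
    price_per_hour: float  # hypothetical price


def choose_offer(offers: list[Offer], region: str, max_latency_ms: float) -> Optional[Offer]:
    """Return the cheapest offer meeting the region and latency requirements, or None."""
    eligible = [o for o in offers if o.region == region and o.latency_ms <= max_latency_ms]
    return min(eligible, key=lambda o: o.price_per_hour, default=None)


catalog = [
    Offer("on-prem", "eu", 5, 0.40),
    Offer("cloud-a", "eu", 20, 0.30),
    Offer("cloud-b", "eu", 60, 0.20),
    Offer("cloud-c", "us", 15, 0.10),
]
print(choose_offer(catalog, "eu", max_latency_ms=30).provider)   # cloud-a
print(choose_offer(catalog, "eu", max_latency_ms=100).provider)  # cloud-b
```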

IT leaders should enable cloud services for the entire business. However, they must ensure that the IT service and support is not compromised.

Trend 6: Stranded Capacity

Both on-premises data centers and cloud services have stranded capacity: resources that were paid for but never used. IT leaders need to focus not only on availability and uptime, but also on capacity, utilization and density. Doing so can extend the lifespan of a data center and lower operating costs for providers.
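Stranded capacity is straightforward to quantify once the provisioned and used amounts are known. The sketch below computes utilization and stranded (paid-for but unused) capacity for each resource. The resource names and figures are hypothetical.

```python
def capacity_report(provisioned: dict[str, float],
                    used: dict[str, float]) -> dict[str, dict[str, float]]:
    """Per resource: utilization as a percentage, and stranded (unused) capacity."""
    report = {}
    for resource, total in provisioned.items():
        in_use = used.get(resource, 0.0)
        report[resource] = {
            "utilization_pct": 100 * in_use / total,
            "stranded": total - in_use,
        }
    return report


# Hypothetical figures for a small data center.
provisioned = {"cpu_cores": 400, "storage_tb": 200, "power_kw": 120}
used = {"cpu_cores": 140, "storage_tb": 150, "power_kw": 90}
for resource, stats in capacity_report(provisioned, used).items():
    print(f"{resource}: {stats['utilization_pct']:.0f}% used, {stats['stranded']} stranded")
```

Here the low CPU utilization (35%) would flag compute as the main source of stranded capacity. Storage and power each sit at 75% utilization.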

Trend 7: Internet of Things

The Internet of Things will transform the way future data centers are managed and designed as large volumes of devices send data to agencies, departments, and enterprises around the globe, whether they do so continuously or periodically. I&O should hire an IoT architect to look at both the IoT strategy and data center's long-term goals.

Organizational

Trend 8: Remote Device Management

The need to centrally manage remote assets is a growing trend among organizations that have remote offices and sites.

The rapid adoption of IoT technologies has introduced a new type of asset: connected sensors. Sensors may require periodic firmware updates or battery replacements, which adds a new level of detail and control to asset tracking systems.
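The following is a minimal sketch of how an asset tracking system might flag sensors that need attention. It assumes that each record stores a firmware version and a battery level. The `Sensor` fields, the 20% battery threshold and the version numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Sensor:
    sensor_id: str
    site: str
    firmware: tuple[int, int, int]  # (major, minor, patch)
    battery_pct: int


def maintenance_due(sensors: list[Sensor], latest_firmware: tuple[int, int, int],
                    battery_threshold: int = 20) -> list[tuple[str, str]]:
    """Return (sensor_id, task) pairs for sensors needing firmware or battery work."""
    tasks = []
    for s in sensors:
        if s.firmware < latest_firmware:  # tuples compare element by element, left to right
            tasks.append((s.sensor_id, "firmware update"))
        if s.battery_pct < battery_threshold:
            tasks.append((s.sensor_id, "battery replacement"))
    return tasks


fleet = [
    Sensor("s1", "warehouse-a", (1, 2, 0), 80),
    Sensor("s2", "warehouse-a", (1, 3, 0), 15),
    Sensor("s3", "branch-b", (1, 1, 5), 10),
]
for sensor_id, task in maintenance_due(fleet, latest_firmware=(1, 3, 0)):
    print(sensor_id, task)
```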

Trend 9: Micro and Edge Computing Environments

Micro and edge computing runs real-time applications that require high-speed responses on the nearest edge servers. This can cut communication delays from several hundred milliseconds to a few milliseconds. It also offloads some computation-intensive processing from the user's device to edge servers, making application processing less dependent on the device's capabilities.
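A back-of-the-envelope calculation shows why distance matters for latency. Light travels through optical fiber at roughly 200,000 km/s, or about 200 km per millisecond, so distance alone sets a floor on round-trip time. The sketch below computes only this propagation delay. The distances are illustrative, and real-world latency also includes routing, queuing and processing delays, which this calculation ignores.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber: ~200 km per millisecond


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, ignoring routing, queuing and processing."""
    return 2 * distance_km / FIBER_KM_PER_MS


for label, km in [("nearby edge server", 20),
                  ("regional data center", 1_000),
                  ("distant data center", 8_000)]:
    print(f"{label}: {propagation_rtt_ms(km):.1f} ms")
```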

Trend 10: New roles in IT

Infrastructure and operations will need to adapt as IT adopts these trends. First among the new roles is the IT cloud broker, responsible for monitoring and managing multiple cloud service providers.

Next, the IoT architect will be tasked with understanding the impact that multiple IoT systems could have on data centers. The architect will work with business units to ensure that their closed-loop IoT systems are compatible with the central IoT architecture, or that they use common protocols and data structures.

Conclusion:

IT refers to the entire spectrum of technologies used to create, process, store, protect and exchange data. Modern IT is the result of six decades of constant and significant innovation. The evolution of IT infrastructure can be divided into five distinct stages: the mainframe era, the personal computer era, the client/server era, enterprise computing and cloud computing. Quantum computing is now bringing about a new revolution in computing.

Quantum computing, which uses the properties of quantum mechanics to store and process data, may be the next step in this evolution. Although the technology is still relatively new, the race for supremacy is already serious.